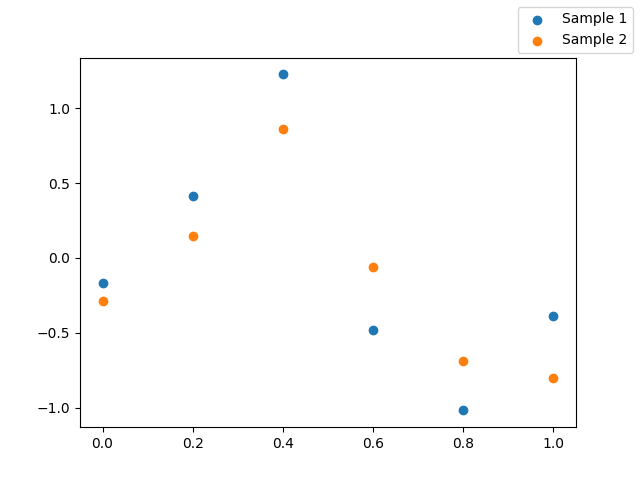

Here we will compare different interpolation strategies. Two-dimensional interpolation finds its way into many applications, such as image processing and digital terrain modeling.
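As a concrete starting point for such a comparison, here is a minimal sketch of two common strategies on a regular grid: nearest-neighbour and bilinear interpolation. The function names, the grid layout (a list of rows), and the assumption of unit grid spacing are illustrative choices, not taken from the original post.

```python
def nearest(grid, x, y):
    """Nearest-neighbour lookup: return the value at the closest grid node.

    grid is a list of rows; x is a (fractional) column index and y a row index,
    both assumed to lie inside the grid (unit spacing, non-negative coordinates).
    """
    # int(v + 0.5) rounds half up for non-negative v.
    return grid[int(y + 0.5)][int(x + 0.5)]


def bilinear(grid, x, y):
    """Bilinear interpolation from the four grid nodes surrounding (x, y).

    Assumes a grid with at least 2 rows and 2 columns and unit spacing.
    """
    nrows, ncols = len(grid), len(grid[0])
    if not (0 <= x <= ncols - 1 and 0 <= y <= nrows - 1):
        raise ValueError("point lies outside the grid")
    # Top-left node of the enclosing cell, clamped so that points on the
    # last row/column still have a neighbour to the right/below.
    x0 = min(int(x), ncols - 2)
    y0 = min(int(y), nrows - 2)
    tx, ty = x - x0, y - y0  # fractional offsets inside the cell, in [0, 1]
    # Linear interpolation along x on the two rows of the cell...
    upper = grid[y0][x0] * (1 - tx) + grid[y0][x0 + 1] * tx
    lower = grid[y0 + 1][x0] * (1 - tx) + grid[y0 + 1][x0 + 1] * tx
    # ...then along y between those two intermediate values.
    return upper * (1 - ty) + lower * ty


grid = [[0, 10],
        [20, 30]]
print(nearest(grid, 0.5, 0.5))   # 30: snaps to node (1, 1)
print(bilinear(grid, 0.5, 0.5))  # 15.0: average of the four corners
```

Nearest-neighbour is the cheapest option but produces a blocky, discontinuous surface; bilinear interpolation is continuous across cells at the cost of four lookups per query. Higher-order schemes such as bicubic interpolation trade more computation for smoother results.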

Linear Regression is a supervised machine learning algorithm that is very easy to learn and implement. Following are the advantages and disadvantages of Linear Regression.

Advantages of Linear Regression:

1. Linear Regression performs well when the relationship between the dependent and independent variables is approximately linear. We can use it to find the nature of the relationship among the variables.
2. Linear Regression is easy to implement and interpret, and very efficient to train.
3. Although Linear Regression can overfit, this is easily mitigated with dimensionality reduction techniques, regularization (L1 and L2), and cross-validation.

Disadvantages of Linear Regression:

1. Assumption of linearity: the main limitation of Linear Regression is that it assumes a straight-line relationship between the dependent variable and the independent variables, which is often incorrect. Real-world relationships are rarely perfectly linear.
2. Prone to noise and overfitting: if the number of observations is smaller than the number of features, plain Linear Regression should not be used, because with more features than observations the model can fit the training data, noise included, almost exactly and therefore overfits.
3. Prone to outliers: Linear Regression is very sensitive to outliers (anomalies), so outliers should be analyzed and removed before applying it to the dataset.
4. Prone to multicollinearity: Linear Regression assumes little or no correlation among the independent variables, so multicollinearity should be removed (for example, with dimensionality reduction techniques) before applying it. Strongly correlated features make the coefficient estimates unstable.
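To illustrate both the "easy to implement and efficient to train" advantage and the sensitivity to outliers, here is a minimal sketch of ordinary least squares for a single feature, using the closed-form solution. The function name `fit_line` and the toy data are hypothetical examples, not from the original post.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x (one feature)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form solution: slope = Sxy / Sxx, intercept from the means.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


xs = [0, 1, 2, 3, 4]
clean = [1, 3, 5, 7, 9]          # exactly y = 1 + 2x
with_outlier = [1, 3, 5, 7, 39]  # same data, last point shifted by +30

print(fit_line(xs, clean))         # (1.0, 2.0)
print(fit_line(xs, with_outlier))  # (-5.0, 8.0)
```

Training is a single pass over the data, but because the loss squares each residual, one anomalous point out of five quadruples the fitted slope in this toy example.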
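The L2 (ridge) regularization mentioned in the list can be sketched for the two-feature case by adding a penalty `lam` to the diagonal of the normal equations, (X^T X + lam * I) w = X^T y. This is a minimal sketch under stated assumptions: the features and target are already centred (so no intercept is fitted), the function name is hypothetical, and only L2 is shown because L1 (lasso) generally has no closed form and needs an iterative solver.

```python
def ridge_two_features(x1, x2, y, lam):
    """Solve (X^T X + lam * I) w = X^T y for two features without intercept.

    lam = 0 gives ordinary least squares; lam > 0 is L2 (ridge) regularization.
    Assumes the features and target are already centred, so no intercept is fitted.
    """
    # Entries of the 2x2 matrix X^T X + lam * I.
    a = sum(u * u for u in x1) + lam
    b = sum(u * v for u, v in zip(x1, x2))
    d = sum(v * v for v in x2) + lam
    # Right-hand side X^T y.
    r1 = sum(u * t for u, t in zip(x1, y))
    r2 = sum(v * t for v, t in zip(x2, y))
    det = a * d - b * b
    if det == 0:
        raise ValueError("X^T X is singular (perfect multicollinearity); use lam > 0")
    # Cramer's rule for the symmetric 2x2 system [[a, b], [b, d]] w = [r1, r2].
    w1 = (d * r1 - b * r2) / det
    w2 = (a * r2 - b * r1) / det
    return w1, w2


# Independent (orthogonal) centred features: plain OLS recovers y = 2*x1 + 3*x2.
print(ridge_two_features([-1, 0, 1], [1, -2, 1], [1, -6, 5], lam=0))  # (2.0, 3.0)

# Perfectly collinear features (x2 == x1): OLS is singular, but ridge still
# solves, splitting the weight evenly and shrinking it towards zero.
x = [-1, 0, 1]
print(ridge_two_features(x, x, [-2, 0, 2], lam=1.0))  # (0.8, 0.8)
```

Calling the collinear case with `lam=0` raises the `ValueError`, which is the multicollinearity problem from the list in its most extreme form; the penalty keeps the system solvable at the cost of some bias in the weights.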
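Before removing multicollinearity, it helps to measure it. A common diagnostic is the variance inflation factor (VIF), VIF = 1 / (1 - R^2), where R^2 comes from regressing one feature on all the others. The sketch below covers only the two-feature special case, where R^2 equals the squared Pearson correlation; the function names are hypothetical, and thresholds such as 5 or 10 are rules of thumb rather than fixed cut-offs.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant feature has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


def vif_two_features(x1, x2):
    """Variance inflation factor when a model has exactly two features.

    With two features, regressing one on the other gives R^2 = r^2,
    so VIF = 1 / (1 - r^2) and is the same for both features.
    """
    r = pearson_r(x1, x2)
    if abs(r) >= 1.0:
        return math.inf  # perfect collinearity
    return 1.0 / (1.0 - r * r)


print(vif_two_features([1, 2, 3, 4], [1, -1, -1, 1]))  # 1.0: uncorrelated
print(vif_two_features([1, 2, 3, 4], [2, 4, 6, 8]))    # inf: x2 = 2 * x1
```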
